Prof. Eyal Ofek

Chair of Computer Science

(HCI, Mixed Reality, Computer Vision)

Work e-mail: e.ofek@bham.ac.uk

Personal e-mail: eyal.ofek@gmail.com

Curriculum vitae (academic)

I am always looking for creative, self-motivated Ph.D. students who are passionate about technology (postdocs, Master’s students, and visiting researchers are also welcome!).

If you are interested in working with me, please email me.

Please apply via the University application system here and mention my name in your application. For more detailed information (e.g., timeline, documents, and funding), please see here.

About me

2024 Chair of Computer Science

University of Birmingham, UK

My research spans HCI (particularly sensing and environment understanding), Mixed Reality, and the use of technology to make collaboration easier and more inclusive.

Member of the School of Computer Science’s Perception, Language, and Action Theme

Member of the University of Birmingham Virtual Reality Lab.

2023 Computer Vision Specialist

Leading the vision/AI development.

Data Blanket – Real-time AI-Driven Fire Fighting

Wildfires result in the loss of thousands of lives, millions of acres, hundreds of billions of dollars in damage, and over 5% of global emissions every year. We are building a system that empowers firefighters with new tactical tools and information, and ensures that every part of the firefighting system performs better.

2011-2023 Principal Researcher & Research Manager, Microsoft Research

My research focused on Human-Computer Interaction (HCI), sensing, and Mixed-Reality (MR) displays to enable users to reach their full potential in productivity, creativity, and collaboration. I have been granted more than 110 patents and have authored over 90 academic publications (with more than 14,000 citations), and I was named a Senior Member of the Association for Computing Machinery (ACM).

In addition to publications and the transfer of technology to products, I have released multiple tools and open-source libraries, such as the RoomAlive Toolkit, used around the world for multi-projection systems; SeeingVR, which enhances the use of VR for people with low vision; the Microsoft Rocketbox avatars; the MoveBox and HeadBox toolkits, which democratize avatar animation; and RemoteLab for distributed user studies.

Academic service: I have served on multiple conference committees (CHI, UIST, CVPR, and more), as the paper chair of ACM SIGSPATIAL 2011, as the Specialty Chief Editor of Frontiers in Virtual Reality for the area of Haptics, and on the editorial board of the IEEE Computer Graphics and Applications journal (CG&A).

I formed and led a new MR research group at Microsoft Research’s Extreme Computing lab. I envision MR applications woven into the fabric of our lives, rather than PC and mobile apps limited to running on a specific device’s screen. Such applications must be smart enough to understand users’ changing physical and social contexts and flexible enough to adapt accordingly. We developed systems such as FLARE (Fast Layout for AR Experiences), which was used by the HoloLens team and inspired the Unity MARS product, and the Triton 3D audio simulation, which was used by Microsoft games such as Gears of War 4 and is the basis of Microsoft Acoustics. IllumiRoom, a collaboration with the Redmond lab, was presented at the CES 2013 keynote.

2005-2011 Research Manager, Bing Maps & Mobile Research Lab, Microsoft

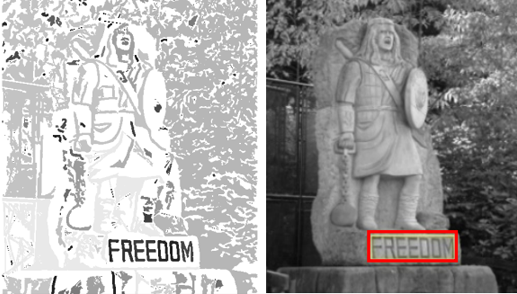

I formed the Bing Maps & Mobile Research Lab, where we combined world-class computer vision and graphics research with impact on the product. Our results include the influential text detection technology used by the Bing Mobile app and incorporated into OpenCV, the world’s first street-side imagery service, a pipeline for street-level reconstruction of geometry and texture, a novel texture compression used by Virtual Earth 3D, and more.

Besides publishing in leading computer vision and graphics forums, our work was presented at TED 2010 and in the New York Times.

1996-2001 CTO (Software)

At a start-up company, I oversaw the software and algorithm R&D of the world’s first time-of-flight video camera. I used the camera for applications such as TV depth keying and reconstruction, and it became the basis for the depth cameras used by the Microsoft HoloLens and Magic Leap HMDs.

1985-1986 Bazbosoft – Founder

Developed Photon-Paint, the award-winning and popular Amiga photo-editing and drawing program.

For more information, please see my LinkedIn profile.

Selected Talks

News

- Feb ’25 – “Handling Uncertainty in UAV Sensor Information using Bayesian Belief Network and Large Language Model” was published at the 1st Int. Conf. on Drones and Unmanned Systems (DAUS 2025)

- Jan ’25 – Became a Meta Project Aria Academic Partner

- Oct. ’24 – Two papers, ‘Avatar Pilot’ and ‘VR Transformer’, were presented at ISMAR 2024

- Sep. ’24 – Joined the University of Birmingham as a Chair of CS.

- Apr. ’24 – The paper “Big or Small” won an Honourable Mention at CHI 2024.

- Mar. ’24 – I’m a co-editor of a special issue on haptics in the metaverse in the IEEE Transactions on Haptics.

- Jan ’24 – Two papers have been accepted to CHI 2024

- July ’23 – Our paper “Beyond Audio” won the Best Paper Award at DIS 2023, CMU, Pittsburgh, Pennsylvania.

- May ’23 – I joined DataBlanket, a startup that works on AI-based firefighting

- Apr ’23 – “Embodying Physics-Aware Avatars in Virtual Reality” received a Best Paper Honorable Mention at CHI 2023

- Feb ’23 – “AdHocProx: Sensing Mobile, Ad-Hoc Collaborative Device Formations using Dual Ultra-Wideband Radios” was accepted to CHI 2023

Research Interests

Adaptive Mixed Reality (MR) & AI

I see Mixed Reality as a revolution that goes beyond display technology. While traditional software is developed, tested, and used on standard devices, MR applications use the user’s environment as their platform. This requires such applications to be aware of the user’s unique context, physical environment, social interactions, and other applications. The rise of machine learning and large language models is an exciting opportunity to incorporate more localized and global knowledge into this process.

I explore new ways to design and implement such applications and study their effects on our work and social interactions.

Sensing, Computer Vision, and Privacy

Another aspect of the technology revolution is the proliferation of sensors, which enable applications to better fit the user’s context and intent. Sensors can enable better interaction with devices as part of a holistic digital environment around the user, one that is focused on the user’s tasks and bridges digital space and physical objects.

I consider it important to design sensing that enables new capabilities while preserving the user’s privacy. New sensors allow us to plan which parts of the space are measured and to use only the minimal data granularity needed for the task.

Accessibility and Inclusion in MR

Today, we can augment our senses in ways that let us be in a digital space, independent of the physical laws that limit us in reality. This enables people to do more than they could achieve in reality. It also helps level the playing field between users with different physical, social, or environmental limitations.

Haptics

Our experience of the real world is not limited to vision and audio. Today, the limited rendering of touch reduces both MR’s realism and the effectiveness of using our hands when working in the space.

I have done extensive research on haptic rendering: rendering haptics through other senses; designing novel hand-held haptic controllers that advanced the state of the art in active haptic rendering; and using scene understanding and the manipulation of hand-eye coordination to exploit the physical environment around the user for haptic rendering.

Avatars

In Virtual Reality and spatial computing simulations, avatars often represent humans.

I have worked on issues such as creating avatars, controlling avatars using natural motions, and decoupling avatars’ motions from users’ motions for accessibility, productivity, and users’ perception.

Academic Service

- Frontiers in Virtual Reality Specialty Chief Editor – Haptics (’20-’22)

- IEEE Computer Graphics & Applications (CG&A) Member of the Editorial Board

- ACM SIGSPATIAL 2011 Conference Paper Chair

- ACM CHI ’22, ’24, ’25 PC Member

- VRST ’23 PC Member

- ISMAR ’23, ’24 PC Member

- ACM UIST ’23 PC Member

- ACM SIGSPATIAL PC Member

- IEEE Computer Vision & Pattern Recognition (CVPR) PC Member

- Pacific Graphics PC Member

- ACM International Conference on Interactive Surfaces and Spaces (ISS) PC Member

- ACM Multimedia Conference (MMSYS) PC Member

- Microsoft Research Ph.D. Fellowship area chair

- Microsoft Research Ada Lovelace Fellowship area chair

- Visiting Professor, School of Computer Science, Interdisciplinary Center, Herzliya, Israel, 2002

Awards

- Best Paper: Honorable Mention, CHI 2024

- Best Paper, DIS 2023

- Best Paper: Honorable Mention Paper, CHI 2023

- Senior Member of the ACM 2022

- Best Paper, DIS 2021

- Best Paper: Honorable Mention Paper, CHI 2020

- Best Paper: Honorable Mention Paper, IEEE VR 2020

- Best Paper: Honorable Mention Demo, UIST 2019

- Best Paper, ISMAR 2019

- Best Paper: Honorable Mention Paper, CHI 2018

- Golden Mouse Award – Best Video Showcase, CHI 2016

- Best Paper, CSCW 2016

- Golden Mouse Award – Best Video Showcase, CHI 2013

- Best Paper, CHI 2013

- Best Paper, UIST 2009

- Microsoft Star Developer, Microsoft Bing Maps 2006

- Charles Clore Scholarship, 1992

120+ Granted patents

2025

- Automatic Generation of Markers Based On Social Interaction, Issued Apr 1, US 12265580 B2

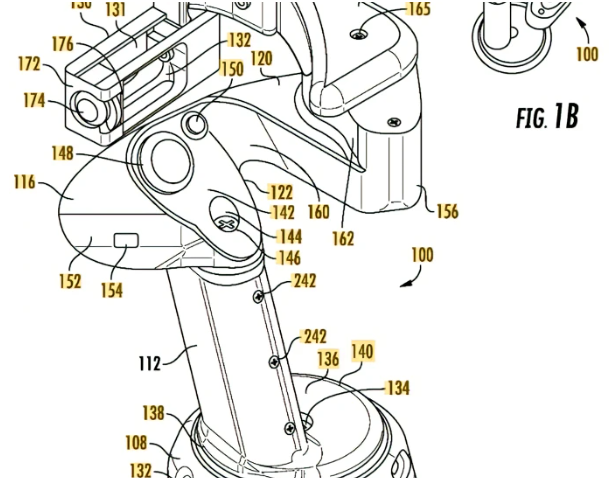

- Haptic controller, Issued Feb 11, US 12223109 B2

2024

- Representing Two Dimensional Representations As Three-dimensional Avatars, Issued Dec. 24, US 12175581 B2

- Mobile Haptic Robots, Issued Dec. 10, US 12161934 B2

- Presenting Augmented Reality Display Data In Physical Presentation Environments, Issued Nov. 12, US 12141927 B2

2023

- Headset Virtual Presence, Issued Oct. 17, US 11792364 B2

- Intuitive Augmented Reality Collaboration On Visual Data, Issued Oct. 10, US 11782669 B2

- Computing Device Headset Input, Issued Jun. 6, US 11669294 B2

- Multilayer Controller, Issued Jan. 17, US 11556168 B2

2022

- Haptic controller, Issued Jan 18, US 11226685

2021

- Three-dimensional object tracking to augment display area, Issued Nov 30, US 11,188,143

- Displacement oriented interaction in computer-mediated reality, Issued Sep 14, US 11,119,581

- Second-person avatars, Issued Aug 10, US 11,087,518

- Haptic Rendering, Issued Aug 10, US 11086398

- Hover-based user-interactions with virtual objects within immersive environments, Issued Jul 20, US 11,068,111

- Real time styling of motion for virtual environments, Issued Jul 6, US 11055891

- Integrated mixed-input system, Issued Jul 6, US 11054894

- Annotation using a multi-device mixed interactivity system, Issued Jun 1, US 11,023,109

- Blending virtual environments with situated physical reality, Issued May 11, US 11004269

- Deployable controller, Issued May 11, US 11003247

- Using eye tracking to hide virtual reality scene changes in plain sight, Issued Apr. 13, US 10,976,816

- Selection using a multi-device mixed interactivity system, Issued Jan 19, US 10895966

- Reality-guided roaming in virtual reality, Issued Jan 5, US 10885710

2020

- Sensor device – Issued Dec 29, US 10874035

- Virtual reality controller – Issued Nov 17, US 10,838,486

- Resistance-based haptic device – Issued Sep 15, US 10,775,891

- Virtually representing spaces and objects while maintaining physical properties – Issued Jun 30, US 10,699,491

- Displacement Oriented Interaction in Computer-Mediated Reality – Issued Apr. 4th, US 10620710

- Controller with haptic feedback – Issued Apr 4th, US 10617942

2019

- Hover-based user-interactions with virtual objects within immersive environments – Issued Dec 24, US 10514801

- Syndication of direct and indirect interactions in a computer-mediated reality environment – Issued Sep 17, US 10417827

- Physical haptic feedback system with spatial warping – Issued Sep 17, US 10416769

- Virtually representing spaces and objects while maintaining physical properties – Issued May 28, US 10304251

- Virtual object manipulation within a physical environment – Issued Aug 6, US 10373381

- Application programming interface for multi-touch input detection – Issued May 14, US 10289239

- Dynamic haptic retargeting – Issued May 14, US 10,290,153

- Block view for geographic navigation – Issued Feb 26, US 10,215,585

- Projecting a virtual copy of a remote object – Issued Feb 26, US 10216982

2018

- Tangible three-dimensional light display – Issued Jul 3, US 10013065

- Layout design using locally satisfiable proposals – Issued May 1, US 9959675

- 3D haptics for interactive computer systems – Issued Mar 3, US 9916003

2017

- Dynamic haptic retargeting – Issued Oct 31, US 9805514

- Protecting privacy in web-based immersive augmented reality – Issued Jun 13, US 9679144

- Visualizing video within existing still images – Issued Mar 14, US 9,594,960

2016

- Immersive display with peripheral illusions – Issued Nov 1, US 9480907

- Second-person avatars – Issued Sep 9, US 9436276

- Transitioning between top-down maps and local navigation of reconstructed 3-D scenes – Issued Aug 23, US 9,424,676

- Managing access by applications to perceptual information – Issued May 31, US 9355268

- Block view for geographic navigation – Issued Mar 29, US 9298345

- Detecting text using stroke width-based text detection – Issued Jan 12, US 9,235,759

2015

- Data difference guided image capturing, Issued Nov 10, US 9,183,465

- Contour completion for augmenting surface reconstructions – Issued Oct 27, US 9171403

- City scene video sharing on digital maps – Issued Oct 20, US 9167290 (US-2012-0262552-A1)

- Scrubbing variable content paths – Issued Sep 29, US 9146119

- Interactive geo-positioning of imagery – Issued Sep 1, US 9123159

- Map editing with little user input – Issued Aug 18, US 9110921

- Cognitive agent – Issued Aug 4, US 9,100,402

- Audio presentation of condensed spatial contextual information – Issued May 12, US 9032042

- Navigation instructions using low-bandwidth signaling – Issued Apr 14, US 9008859

- Removal of Rayleigh scattering from images – Issued Mar 3, US 8970691

- Spatial image index and associated updating functionality – Issued Mar 3, US 8971641

- Selective spatial audio communication – Issued Feb 17, US 8,958,569

- Providing routes through information collection and retrieval – Issued Feb 10, US 8,954,266

- Annotating or editing three-dimensional space – Issued Jan 27, US 8,941,641

2014

- Detecting text using stroke width-based text detection – Issued Dec 23, US 8917935

- Viewing media in the context of street-level images – Issued Sep 9, US 8,831,380

- Simulated video with extra viewpoints and enhanced resolution for traffic cameras – Issued Sep 2, US 8823797

- Automated fitting of interior maps to general maps – Issued Aug 26, US 8817049

- Calibration and annotation of video content – Issued Jul 1, US 8769396

- User interfaces for interacting with top-down maps of reconstructed 3-D scenes – Issued Jun 8, US 8773424

- Geo-relevance for images – Issued Jul 8, US 8,774,520

- Path queries – Issued Apr 8, US 8694383

- Image-based localization for addresses – Issued Apr 1, US 8688368

- Electromechanical surface of rotational elements for motion compensation of a moving object – Issued Mar 18, US 8675018

- Virtual closet for storing and accessing virtual representations of items – Issued Feb 4, US 8645230

- Adjustable and progressive mobile device street view – Issued Jan 28, US 8640020

- View generation using interpolated values – Issued Jan 21, US 8633942

- Transitioning between top-down maps and local navigation of reconstructed 3-D scenes – Issued Jan 7, US 8624902

2013

- Spatially registering user photographs – Issued Dec 17, US 8,611,643

- Computing transitions between captured driving runs – Issued Nov 12, US 8581900

- Shadow detection in a single image – Issued Nov 5, US 8,577,170

- Detection of objectionable videos – Issued Nov 1, US 8549627

- Filter and surfacing virtual content in virtual worlds – Issued Oct 29, US 8570325

- Automatic generation of markers based on social interaction – Issued Oct 15, US 8560515

- Geographic data acquisition by user motivation – Issued Oct 8, US 8550909

- Visual assessment of landmarks – Issued Oct 1, US 8,548,725

- Data difference guided image capturing – Issued Aug 6, US 8503794

- Hybrid mobile phone geopositioning – Issued Jul 23, US 8,494,566

- Validating user generated three-dimensional models, Issued Jul 16, US 8,487,927

- Mobile and server-side computational photography – Issued Jul 16, US 8,488,040

- Depersonalizing location traces – Issued Jun 11, US 8463289

- Panoramic ring user interface – Issued May 28, US 8453060

- Viewing media in the context of street-level images – Issued May 11, US 8447136

- Camera-based multi-touch mouse – Issued May 21, US 8446367 (US-2010-0265178-A1)

- Data driven interpolation using geodesic affinity – Issued May 21, US 8447105

- Identifying physical locations of entities – Issued May 14, US 8442716

- Tagging video using character recognition and propagation – Issued Apr 30, US 8433136

- Cognitive agent – Issued Apr 23, US 8,428,908

2012

- Techniques for robust color transfer – Issued Dec 25, US 8,340,416

- Geo-relevance for images – Issued Dec 4, US 8,326,048

- Generating a texture from multiple images – Issued Nov 27, US 8,319,796

- Validation and correction of map data using oblique images – Issued Nov 13, US 8,311,287

- Annotating images with instructions – Issued Oct 30, US 8301996

- Method, medium, and system for ranking dishes at eating establishments – Issued Oct 23, US 8296194

- Spatially registering user photographs – Issued Oct 23, US 8295589

- Map aggregation – Issued Sep 11, US 8266132

- Visualizing camera feeds on a map – Issued Aug 7, US 8237791

- Factoring repeated content within and among images – Issued Jun 19, US 8204338

- Importance guided image transformation – Issued Jun 12, US 8200037

- Cognitive agent – Issued Jun 5, US 8195430

- Geocoding by image matching – Issued May 29, US 8189925

- Flexible matching with combinational similarity – Issued May 22, US 8,184,911

- Generating a texture from multiple images – Issued Feb 28, US 8125493

- Dynamic map rendering as a function of a user parameter – Issued Jan 24, US 8103445

- Smart navigation for 3D maps – Issued Jan 17, US 8098245

2011

- Hybrid maps with embedded street-side images – Issued Dec 27, US 8085990

- Visual assessment of landmarks – Issued Nov 15, US 8060302

- Semi-automatic plane extrusion for 3D modeling – Issued Nov 15, US 8059888

- Augmenting images for panoramic display – Issued Aug 30, US 8009178

- Geotagging photographs using annotations – Issued Aug 2, US 7991283

- Multi-directional image displaying device – Issued Jun 28, US 7967451

- Flexible matching with combinational similarity – Issued Jun 7, US 7957596

- Camera based orientation for mobile devices – Issued May 24, US 7946921

- Displaying images related to a requested path – Issued May 10, US 7941271

- Landmark-based routing – Issued Mar 22, US 7912637

- Techniques for decoding images of barcodes – Issued Feb 15, US 7886978

- Image completion – Issued Feb 15, US 7889947

2010

- Camera and acceleration-based interface for presentations – Issued Dec 14, US 7,852,315

- Street-side maps and paths – Issued Nov 23, US 7840032

- Modeling and texturing digital surface models in a mapping application – Issued Nov 9, US 7831089

- System for guided photography based on image capturing device rendered user recommendations according to embodiments – Issued Sep 28, US 7805066

- Mode information displayed in a mapping application – Issued Aug 17, US 7777648

2009

- Video enhancement – Issued Jun 16, US 7,548,659

- Remote control of on-screen interactions – Issued Jan 13, US 7477236

Invited talks

- Invited Talk, Laboratory of Robotics and Engineering Systems (LARSyS) Annual Meeting, Lisbon, Portugal, July 2023

- ‘TaskVerse’, Cambridge, UK, July 2023

- Virtual Keynote, Harvard AR/VR Symposium, Dec 2022

- Virtual Keynote, RSS ’22: Toward Robot Avatars: Perspectives on the ANA Avatar XPRIZE Competition.

- Invited Talk, Smart Haptics 2021, Dec 2021

- Interview, Microsoft Research Tech Minutes, Nov 2021

- Microsoft Research Seminar, Enhancing mobile work and productivity with VR, Dec 2020

- Invited talk, Behind the scenes with Microsoft: VR in the Wild, Global XR Bootcamp, Nov 2020

- Invited Talk, Haptics in AR and VR, Frontiers in Virtual Reality editors seminar series, May 2020

- Interview, The 21st, NPR, Feb 2020

- Invited Plenary Talk, 15th CSL Student Conference, University of Illinois at Urbana-Champaign, Feb 2020

- Interview and demo, Microsoft Research Faculty Summit, Jul. 2019

- Interview, Microsoft Research Podcast, Sep. 2019

- Interview, BBC News, May 2014

- Invited Talk, AWE (Augmented World Expo), June 2013

- Demo to the Israeli President and Nobel Peace Prize Laureate, Mr. Shimon Peres, Dec 2013

- Interview, Israel TV Channel 10, Nov 2011

- Keynote, Com.Geo, Washington DC, May 2011

- Demo, TED 2010 (presented by Bing Maps & Mobile Architect Blaise Agüera y Arcas), 2010

- Invited Talk, ThinkNext, Tel Aviv, Israel, 2010

- Panelist, “Location, location, location,” 6Sight, Monterey, CA, 2007

Media Coverage

- Oct 2021: NATURE Device & Materials Blog: “Building the next generation of Shape Displays.”

- Oct 2020 Microsoft Research Blog: Physics matters: Haptic PIVOT, an on-demand controller, simulates physical forces such as momentum and gravity

- Oct 2020 Engadget: “Microsoft explores realistic VR haptics with a wrist-mounted gadget.”

- Oct 2020 Gizmodo: “These Wrist-Worn Hammers Swing Into Your Hands, So You Feel Virtual Objects”

- Aug 2020 VentureBeat: “Researchers bring Google Sheets and Microsoft Excel into VR.”

- May 2020 Microsoft Research – Research Collection: Tools and Data to Advance the State of the Art

- Apr. 2020 Microsoft Research Blog: Bringing virtual reality to people who are blind with an immersive sensory-based system

- Mar. 2020 Microsoft Research Blog: Release Microsoft Rocketbox Avatars library as open source

- Feb. 2020 NPR, The 21st: Using Virtual Reality To Help People With Disabilities.

- Jan. 2020 Scientific American: “Virtual Reality Has an Accessibility Problem”

- Oct. 2019 Microsoft Research Blog: A new era of spatial computing brings fresh challenges and solutions to VR.

- Oct 2019 Daily Mail: “Microsoft reveals its DreamWalker VR rig that lets users explore a virtual world while walking around in real life.”

- Oct 2019 ZDnet: “Dream or nightmare? Microsoft’s VR swaps your real walking route for a virtual one.”

- Oct 2019 Gizmodo: “Microsoft’s Over-the-Top VR Rig Lets You Explore a Virtual World While Walking IRL”

- Oct 2019 Engadget: “Microsoft’s latest VR experiment is a literal walk in the park.”

- Oct 2019 Ars Technica: “Microsoft’s DreamWalker VR turns your daily commute into a totally different one.”

- Oct 2019 RoadToVR: “Microsoft ‘DreamWalker’ Experiment Takes First Steps into Always-on World-scale VR”

- May 2019 Microsoft Research Blog: Introducing TORC: A rigid haptic controller that renders elastic objects

- May 2019 VentureBeat: “Microsoft’s TORC will let you feel squeezable objects in AR and VR.”

- Apr. 2019 Microsoft Research Blog: Advancing accessibility on the web, in virtual reality, and in the classroom

- Apr. 2019 Engadget: “Microsoft is making VR better for those with vision problems.”

- Apr. 2019, RoadToVR: “Microsoft Aims to Improve VR for Users with Vision Problems.”

- Mar. 2019 Microsoft Research Blog: Giant steps and liberating spaces – Virtual Reality is making cool moves

- Apr. 2018 Microsoft Research Blog: Uncanny Valley and the Sense of Touch

- Mar. 2018 Microsoft Research Blog: Touching the Virtual: How Microsoft Research is Making Virtual Reality Tangible

- Mar 2018, Co.Design: “Crazy Microsoft is the best Microsoft.”

- Mar 2018 Engadget “Microsoft’s mad scientists are making AR more tactile.”

- Oct 2016 RoadToVR: “Microsoft Research Demonstrates VR Controller Prototypes With Unique Haptic Technology”

- May 2016, Game Developer: “Microsoft’s new haptic VR tech blurs the lines between realities.”

- Mar 2014, PCWorld: “When a monitor just isn’t big enough, try Microsoft’s SurroundWeb.”

- Mar 2014, The Telegraph: “Microsoft Research unveils 3D browser concept.”

- Mar 2014 Daily Mail: “Turn your entire room into a SCREEN: Microsoft tool lets you browse the web and beam videos onto the walls of your home.”

- Oct 2014 Washington Post: “Why it matters that Microsoft is channeling the Star Trek holodeck”

- Oct 2014 CNet: “Microsoft’s RoomAlive turns your room into a Holodeck.”

- Apr 2013 NBC News: “The IllumiRoom is Xbox’s Proto-Holodeck”

- Apr 2013 The Verge: “Microsoft IllumiRoom is a coffee table projector designed for the next-generation Xbox”

- Jan 2013 CES Samsung keynote presents our IllumiRoom (from 51:58)

- Feb 2010 Flickr Blog: “Flickr, Flickr, Everywhere”

- Feb 2010 Bing Blog: “TED2010: Spatial Search – Bing, Flickr, Videos, Maps, and the Stars”

Teaching

- Visualization 2024/2025

- Robot Vision (Partial) 2024/2025