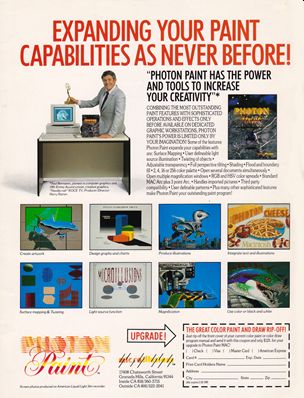

Photon Paint I & II, Spectra Color (1987-1989)

Photon Paint was an image manipulation and drawing application, first released in 1987 for the Commodore Amiga. It was followed by Photon Paint II in 1988 and later by Photon Paint for the Macintosh.

It was developed by Bazbosoft (Oren Peli, Eyal Ofek, and Amir Zbeda) and distributed by MicroIllusion, and it was purchased by about 33% of all Amiga owners worldwide!

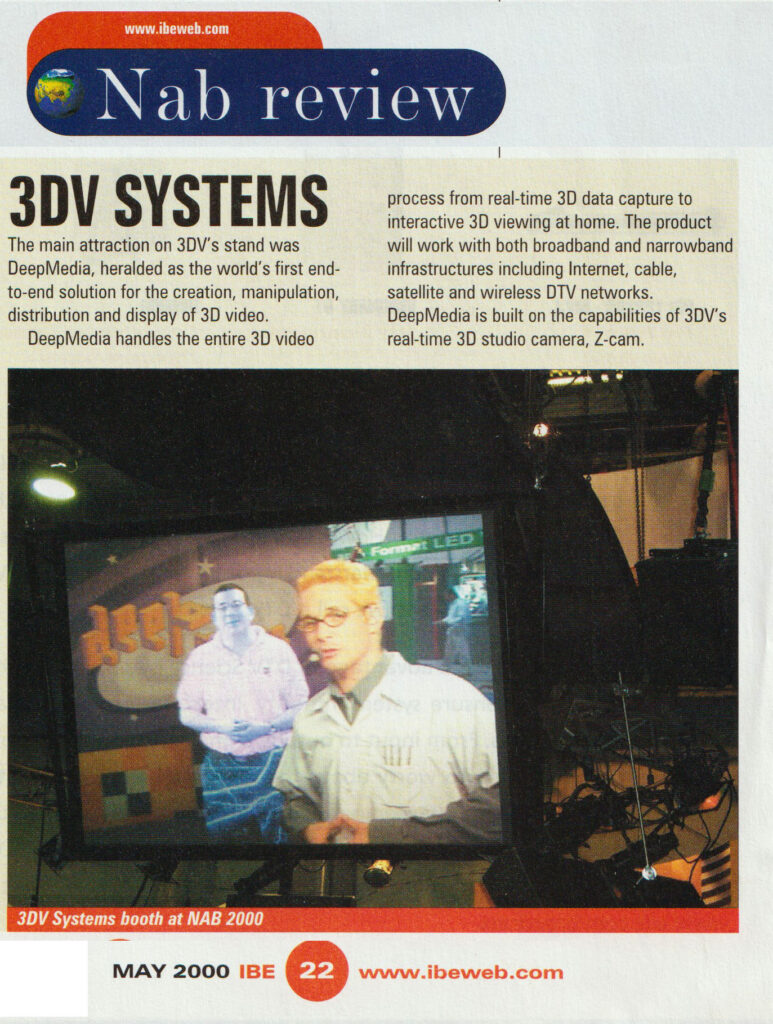

ZCam (1996-2003) – World's First TOF RGBD Camera

ZCam was the first in a line of time-of-flight camera products for video applications developed by the Israeli company 3DV Systems. The ZCam supplements full-color video imaging with real-time range imaging, allowing video to be captured in 3D. Microsoft later acquired the company, and the technology was incorporated into HoloLens.

I was in charge of all software and algorithms for the camera and its applications from the project's start in 1996 until 2004.

Awards:

NAB 1999 – Best of Show

Videography 1999 – Editors’ Choice

Television Broadcast 1999 – Editor’s Pick

Advanced Imaging – Solution of the Year 1999

JVC, Wikipedia, WideScreen Review

NAB reviews (ASCII Japan 1999, IBE 2000)

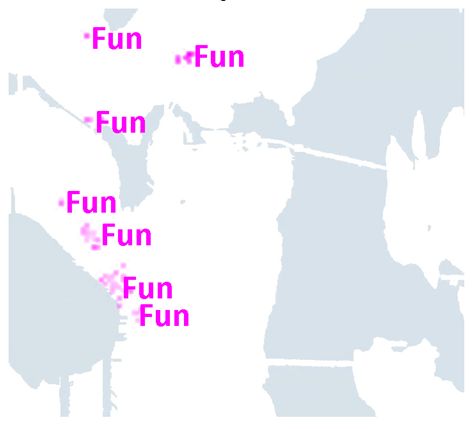

World's First StreetSide Service (2006)

Local.live Street Side Technical Preview – Feb. 2006

Four months after moving from Microsoft Research to Virtual Earth, we released a technical preview that showed Street Side images of the Seattle and San Francisco city centers. The preview let users 'drive' a car through the streets and view images of the streets along the way. It was the first online service offering immersive 360-degree street-level experiences. I developed the entire project, with help from B. Snow (web page programming) and R. Welsh (graphic design).

Automatic geo-positioning of Flickr’s images (2010)

M. Kroepfl and I worked on matching user images to Bing Maps street-level images. The result shipped as a Bing Maps application in February 2010 and was shown at TED 2010.

TED talk by Blaise Agüera y Arcas.

Geek in Disguise, Search Engine Land, Tech Flash, Bing Blog, Flickr

Semantically tagging maps with crowd data (2010)

I worked on attaching semantic tags to maps based on user-generated labels, such as those found on Flickr™ images. A service based on this work was demonstrated at Where 2.0 2011 and is available on the web.

Microsoft Touch Mouse (2011)

Microsoft Touch Mouse – Aug. 2011

Although I was not involved in developing the product, it is based on work that Hrvoje Benko and I started, which was later incorporated into our UIST paper and our patent.

IllumiRoom (2013)

FLARE (2014)

FLARE is a rule-based system for generating object layouts for AR applications.

Part of its technology has been incorporated into MARS, a project of the Unity game engine.

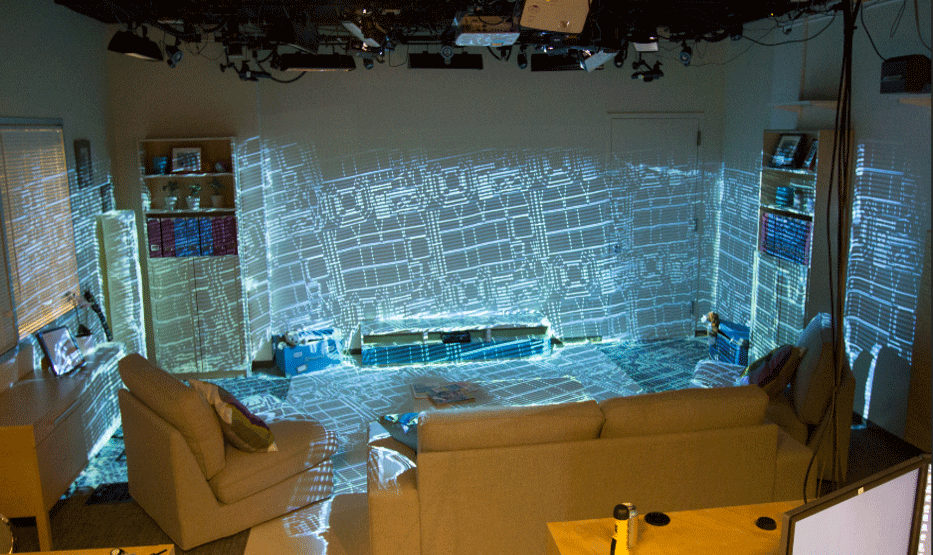

RoomAlive (2014)

RoomAlive is a proof-of-concept prototype that envisions a future of interactive gaming with projection mapping. RoomAlive transforms any room into an immersive, augmented entertainment experience through video projectors. Users can touch, shoot, stomp, dodge, and steer projected content that seamlessly coexists with their physical environment. RoomAlive builds heavily on our previous research project, IllumiRoom, which explored interactive projection mapping around a television screen. IllumiRoom focused largely on display, extending traditional gaming experiences beyond the TV. RoomAlive instead focuses on interaction and the new games we can create with interactive projection mapping. RoomAlive looks further into the future of projection mapping and asks what new experiences we will have in the next few years.

Description of Projection Mapping. Video

An open-source SDK enables developers to calibrate a network of multiple Kinect sensors and video projectors. The toolkit also provides a simple projection mapping sample that can be used to develop new immersive augmented reality experiences similar to those of the IllumiRoom and RoomAlive research projects.

The RoomAlive Toolkit is provided as open source under the MIT License.

The code is available for download on GitHub: https://github.com/Kinect/RoomAliveToolkit.

B. Lower and A. Wilson gave a talk on the RoomAlive Toolkit at BUILD 2015.

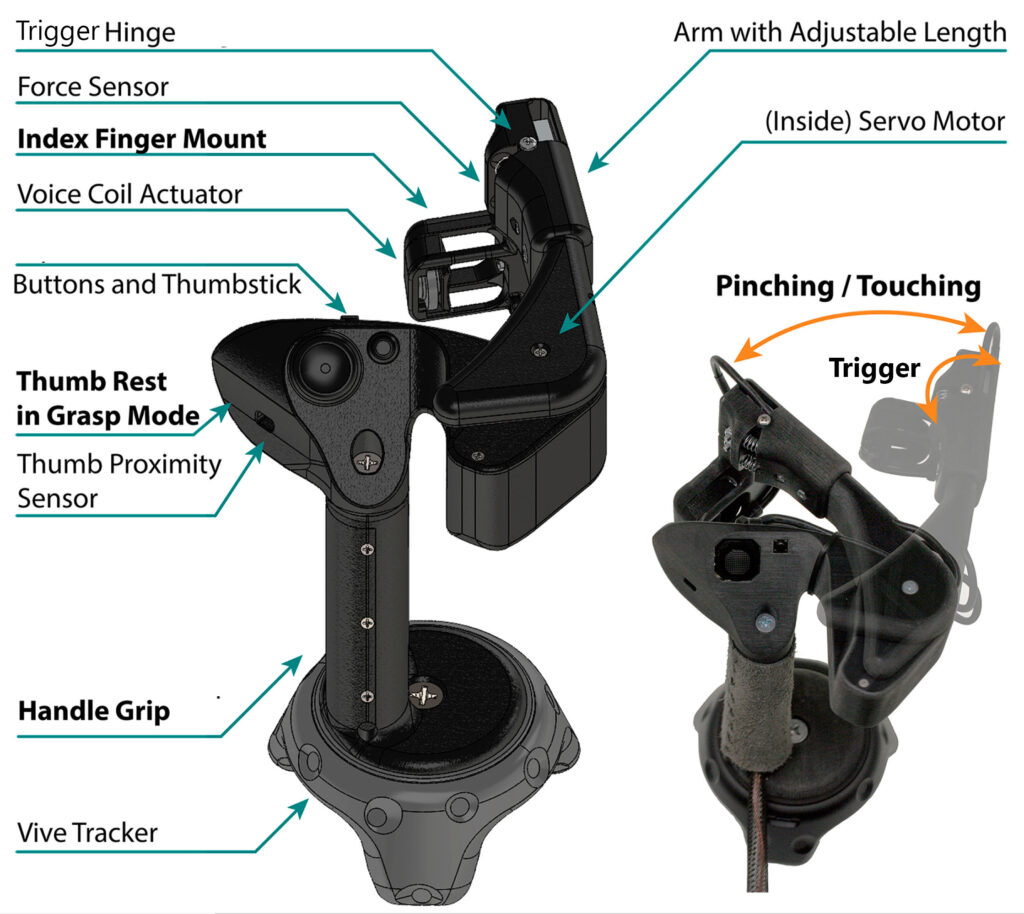

Haptic controllers (2017-current)

Virtual Reality (VR) and Augmented Reality (AR) have progressed dramatically in the past 30 years. Today, we can wear a consumer head-mounted display and experience fantastic worlds populated with rich geometry and beautiful, realistically rendered virtual objects. 3D audio plays sound in our ears as if it were generated by virtual sources in space, and it can adapt as we move around. However, whenever we reach out to touch a virtual object, the illusion breaks: it is only a mirage, and our hand touches or grasps only air.

In contrast to their visual and audio rendering capabilities, consumer devices' tactile output is mostly limited to a simple buzz: a vibration generated by a motor or actuator buried inside the controller. Although many research projects aim to render a variety of tactile sensations, they have not reached consumers. There are many reasons for this. Laboratory prototypes such as exoskeletons and other hand-mounted devices may require a cumbersome procedure to fit to users and to put on or take off. Many prototype devices can simulate only one specific sensation, such as texture, heat, or weight, which may not be general enough to attract users. Complex mechanics involving many motors may make a device too expensive, bulky, or fragile to be a consumer product.

We have been exploring several ways to generate a wide range of haptic sensations with technology that fits within handheld VR controllers, not unlike those consumers use today. Such controllers could let users touch and grasp virtual objects, feel their fingertips slide across the objects' surfaces, and more. The ultimate goal is to allow users to interact with the virtual world more naturally than ever before.

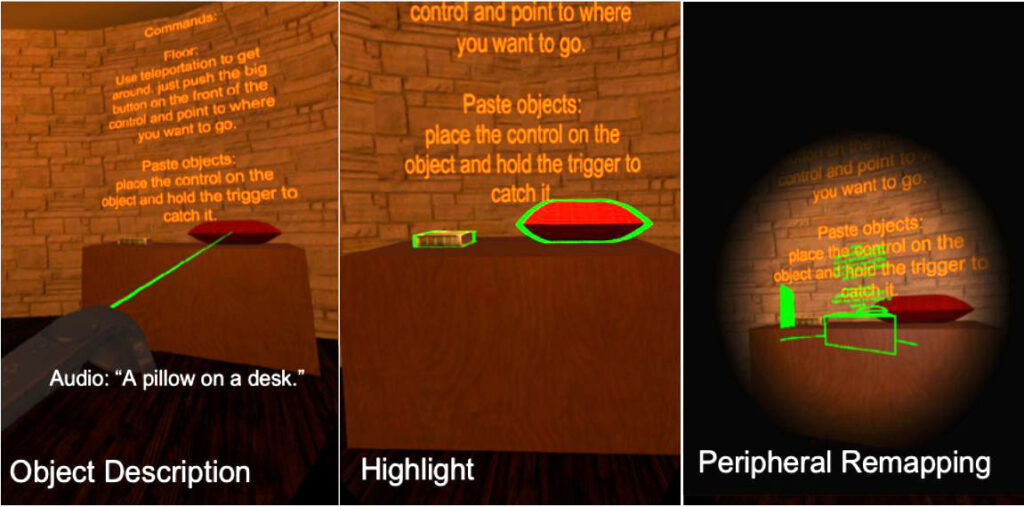

SeeingVR Toolkit (2019)

Current virtual reality applications do not support people with low vision, i.e., vision loss that falls short of complete blindness but cannot be corrected by glasses. We present SeeingVR, a set of 14 tools that enhance VR applications for people with low vision by providing visual and audio augmentations.

The code is available for download on GitHub.

Video, Demo at Microsoft Research Faculty Summit 2019, SeeingVR. Zhao et al., CHI 2019

Related publications

Virtual reality without vision: A haptic and auditory white cane to navigate complex virtual worlds. Siu et al., CHI 2020 (Honorable Mention paper)

Accessible by Design: An Opportunity for Virtual Reality. Mott et al., 2019 Workshop on Mixed Reality and Accessibility

VR & AR in the wild (2014-current)

New head-mounted displays (HMDs) with continuous inside-out optical tracking let users wander through large environments, allowing applications to span large spaces and long time intervals. For example, a user may play a multiplayer game across multiple rooms or outdoors, or a group of workers may move through a large space while sharing the same content. We explore the technologies required to enable this future, along with several example vertical applications.

Related publications

SurroundWeb: Mitigating Privacy Concerns in a 3D Web Browser. IEEE Security & Privacy 2015

Spatial Constancy of Surface-Embedded Layouts across Multiple Environments. 2015

Generating On-The-Fly VR Experiences While Walking inside Large, Unknown Real-World Building Environments. IEEE VR 2019

Avatars

Inside Virtual Reality (VR), users are represented by avatars. When an avatar is co-located with the user's body and seen from a first-person perspective, users experience what is commonly known as embodiment: they feel that the self-avatar has substituted their own body and that the new body is the source of their sensations. Embodiment is complex, as it includes body ownership over the avatar, agency, co-location, and external appearance. Despite the many variables that influence it, the illusion is quite robust. It can arise even when the self-avatar differs from the participant's own body in age, size, gender, or race.

Our avatar research has tried to push the boundaries of what avatars can do and to study how they are perceived, how users behave when interacting with them, the basis of self-recognition in avatars, how avatars affect our locomotion in VR, and how they change our motor actions, from both the computer graphics and the human-computer interaction perspectives.

This line of research also aims to understand its further implications for psychological and neuroscience theories.

As part of this effort, we have released three main open-source projects to the community.

Open-source Microsoft Rocketbox library.

Paper at Frontiers of Virtual Reality