RoomAlive Toolkit

The RoomAlive Toolkit enables creation of dynamic projection mapping experiences. This toolkit has been in internal use at Microsoft Research for several years and has been used in a variety of interactive projection mapping projects such as RoomAlive, IllumiRoom, ManoAMano, Beamatron and Room2Room.

The toolkit consists of two separate projects:

- ProCamCalibration – This C# project can be used to calibrate multiple projectors and Kinect cameras in a room to enable immersive, dynamic projection mapping experiences. The code base also includes a simple projection mapping sample using Direct3D.

- RoomAlive Toolkit for Unity – A set of Unity scripts and tools that enable immersive, dynamic projection mapping experiences, based on the projector-camera calibration from ProCamCalibration. This project also includes a tool to stream and render Kinect depth data to Unity.

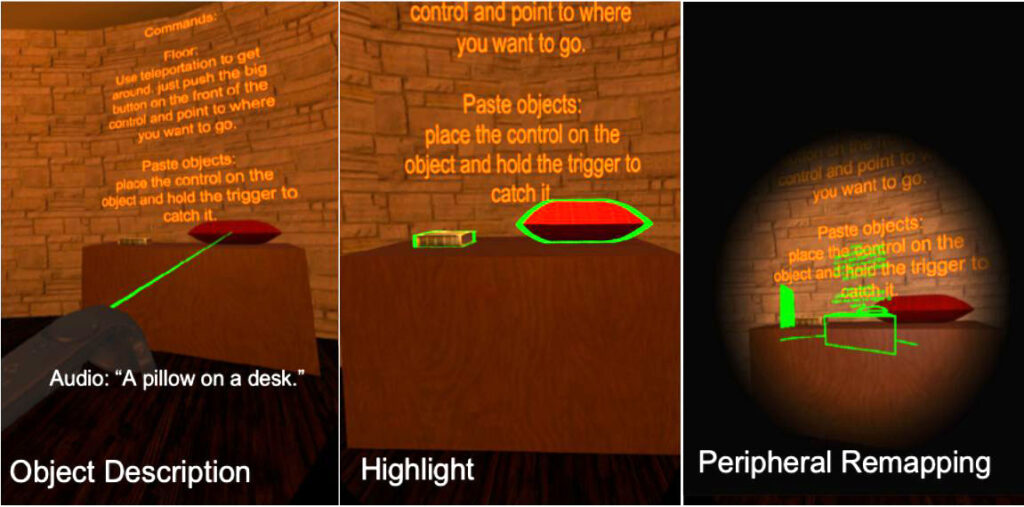

SeeingVR toolkit

2019

Current virtual reality applications do not support people who have low vision, i.e., vision loss that falls short of complete blindness but is not correctable by glasses. We present SeeingVR, a set of 14 tools that enhance a VR application for people with low vision by providing visual and audio augmentations.

Microsoft Rocketbox Avatars

The Microsoft Rocketbox Avatar library consists of 115 fully rigged, high-definition characters and avatars developed over the course of 10 years. The diversity of the characters and the quality of the rigging, together with relatively low-poly meshes, make this library the go-to asset among research laboratories worldwide, for uses ranging from crowd simulation to real-time avatar embodiment and social Virtual Reality (VR). Since its launch, laboratories around the globe have used the library, and many lead authors in the VR community have used these avatars extensively in their research.

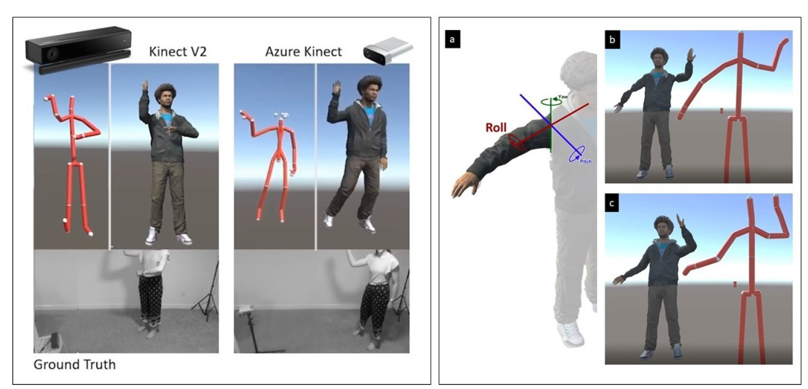

MoveBox for Microsoft Rocketbox

Nov 2020

MoveBox is a toolbox to animate the Microsoft Rocketbox avatars using motion capture (MoCap). Motion capture is performed using a single depth sensor, such as Azure Kinect or Windows Kinect V2. Our toolbox enables real-time animation of the user’s avatar by converting the transformations between systems that have different joints and hierarchies.
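As a rough illustration of what converting transformations between skeletons can involve, the sketch below maps world-space joint rotations from a sensor skeleton onto an avatar skeleton with its own parent hierarchy. It is not MoveBox code: the joint names, the bone names, the mapping table and the `retarget` function are all hypothetical, and real avatars also need rest-pose and axis-convention corrections that are omitted here.

```python
import math

# Quaternions are (w, x, y, z) tuples. Everything below is illustrative.

def q_mul(a, b):
    """Hamilton product of two quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def q_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def q_about_z(degrees):
    """Unit quaternion for a rotation about the z axis."""
    half = math.radians(degrees) / 2
    return (math.cos(half), 0.0, 0.0, math.sin(half))

# Hypothetical mapping from sensor joint names to avatar bone names.
SENSOR_TO_AVATAR = {"ShoulderLeft": "UpperArm_L", "ElbowLeft": "Forearm_L"}
# Hypothetical avatar hierarchy: bone -> parent bone (None for the root).
AVATAR_PARENT = {"UpperArm_L": None, "Forearm_L": "UpperArm_L"}

def retarget(sensor_world):
    """Turn world-space sensor rotations into avatar-local rotations."""
    world = {
        SENSOR_TO_AVATAR[joint]: q
        for joint, q in sensor_world.items()
        if joint in SENSOR_TO_AVATAR
    }
    local = {}
    for bone, q in world.items():
        parent = AVATAR_PARENT[bone]
        if parent is None or parent not in world:
            local[bone] = q  # no tracked parent: keep the world rotation
        else:
            # local = inverse(parent world rotation) * own world rotation
            local[bone] = q_mul(q_conj(world[parent]), q)
    return local
```

In this sketch, a shoulder rotated 30 degrees and an elbow rotated 75 degrees about the same axis yield a forearm rotation of 45 degrees relative to the upper arm.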

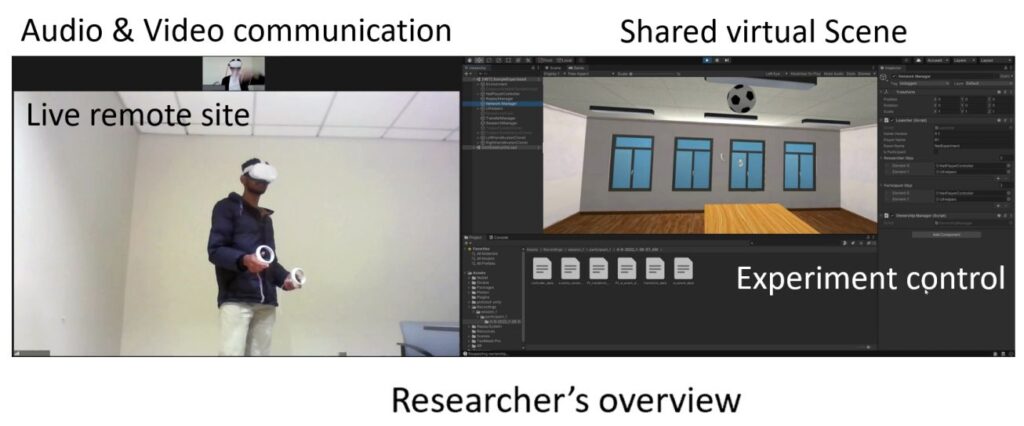

RemoteLab

Nov 2022

The XR Remote study toolkit is a toolkit for Unity that allows users to record and replay gameplay from the Unity editor window. The toolkit currently allows users to track the changes in Transform for each GameObject in the scene, record UI events (Toggle, Button, and Slider events), note changes in user-defined variables, and implement custom Likert scale surveys and the raw NASA-TLX survey.

This work was done as a collaboration between Microsoft Research and researchers from the University of Washington, Vanderbilt University and UIUC. The work is described in a paper presented at UIST 2022, Bend, Oregon.
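To make the record-and-replay idea concrete, here is a minimal sketch in Python rather than Unity C#. It logs a sample whenever an object's transform changes and rebuilds the scene state for a given time from that log. The `Transform`, `TransformRecorder` and `replay` names are assumptions made for illustration and do not reflect the toolkit's actual API or file format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Transform:
    """Hypothetical stand-in for a Unity Transform."""
    position: tuple
    rotation: tuple
    scale: tuple = (1.0, 1.0, 1.0)

class TransformRecorder:
    """Stores one event per object each time its transform changes."""

    def __init__(self):
        self.events = []  # list of (time, object_name, Transform)
        self._last = {}   # most recent transform seen per object

    def capture(self, time, scene):
        """Call once per frame, with non-decreasing `time` values."""
        for name, transform in scene.items():
            if self._last.get(name) != transform:
                self.events.append((time, name, transform))
                self._last[name] = transform

def replay(events, until):
    """Rebuild the scene state at time `until` from recorded events."""
    scene = {}
    for time, name, transform in events:
        if time > until:
            break  # events are stored in time order
        scene[name] = transform
    return scene
```

Storing only changes keeps the log small when most objects are static, at the cost of having to scan the log from the start to reconstruct a given moment.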

CityLifeSim

CityLifeSim is a flexible, high-fidelity simulation that allows users to define complex scenarios with essentially unlimited actors, including both pedestrians and vehicles. Each vehicle and pedestrian operates with basic intelligence that governs the low-level controls needed to maneuver: avoiding collisions, navigating corners, stopping at traffic lights, etc. The high-level control for each agent then allows the user to define behaviors in an abstract form, controlling the agent's sequence of actions (e.g., hurry to this intersection, then cross the road, turn left at the park, then wait for a bus at the stop), its speed changes in different legs of the journey, its stopping distance, its susceptibility to influence from the environment and its risk-taking behavior.
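The split between high-level plans and low-level control can be pictured with a small sketch. This is not the simulator's scripting interface: the `Leg` and `Agent` classes, the action names and the 0.5 risk threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Leg:
    """One step of a high-level plan (hypothetical structure)."""
    action: str         # e.g. "walk_to", "cross_road", "wait_for_bus"
    target: str
    speed: float = 1.4  # metres per second, an illustrative default

@dataclass
class Agent:
    name: str
    plan: list
    stopping_distance: float = 1.0
    risk_taking: float = 0.0  # 0 = cautious, 1 = ignores signals
    step: int = 0

    def next_command(self, light_is_red, obstacle_distance):
        """Low-level rule: pick an action and speed for the current leg."""
        if self.step >= len(self.plan):
            return ("idle", 0.0)
        leg = self.plan[self.step]
        if obstacle_distance < self.stopping_distance:
            return ("stop", 0.0)  # collision avoidance comes first
        if leg.action == "cross_road" and light_is_red and self.risk_taking < 0.5:
            return ("stop", 0.0)  # cautious agents wait for the light
        return (leg.action, leg.speed)

    def arrive(self):
        """Advance to the next leg once the current target is reached."""
        self.step += 1
```

A pedestrian who hurries to an intersection and then crosses could be written as `Agent("p1", [Leg("walk_to", "intersection", speed=2.5), Leg("cross_road", "park")])`. Raising `risk_taking` above the threshold makes the same plan cross against a red light.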

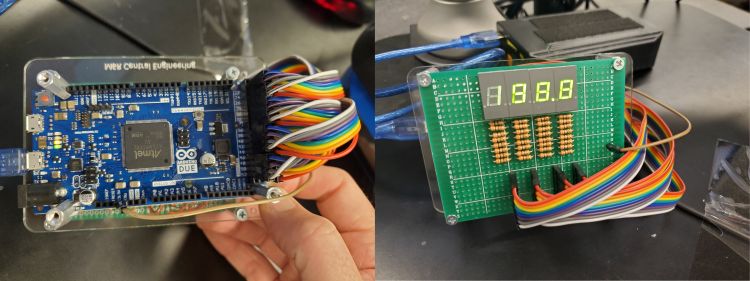

Microsecond Arduino Code and Schematics accompanying the paper presented at IEEE VR 2020, “Measuring System Visual Latency through Cognitive Latency on Video See-Through AR devices” by Robert Gruen, Eyal Ofek, Anthony Steed, Ran Gal, Mike Sinclair, and Mar Gonzalez-Franco.